Building an Intelligent Knowledge Base: Indexing Microsoft Teams Conversations into Azure AI Search

Introduction

In modern workplaces, valuable knowledge often lives in chat conversations — especially in Microsoft Teams channels. Important discussions, troubleshooting steps, and decisions are made daily, but finding them later can be difficult.

To make this knowledge reusable, I built an automated pipeline that indexes Teams conversations (posts and replies) into an Azure AI Search index. Each message is enriched with OpenAI embeddings, turning plain chat history into a searchable, AI-ready knowledge base.

This article walks you through the complete setup — using Power Automate, Azure OpenAI, and Azure AI Search — to build your own Teams-to-AI index integration.

1) The Goal

We wanted to achieve three main outcomes:

- Capture all Teams messages (including replies) from a selected channel.

- Enrich each message with an Azure OpenAI embedding so it can be retrieved by semantic similarity.

- Index those messages into Azure AI Search (formerly Azure Cognitive Search) for later use in:

  - Building an internal Q&A or AI assistant

  - Knowledge retrieval for LLMs

  - Centralized team knowledge discovery

2) Architecture Overview

Here’s the high-level flow of our solution:

Teams Channel → Power Automate Flow → Azure OpenAI (Embeddings) → Azure AI Search

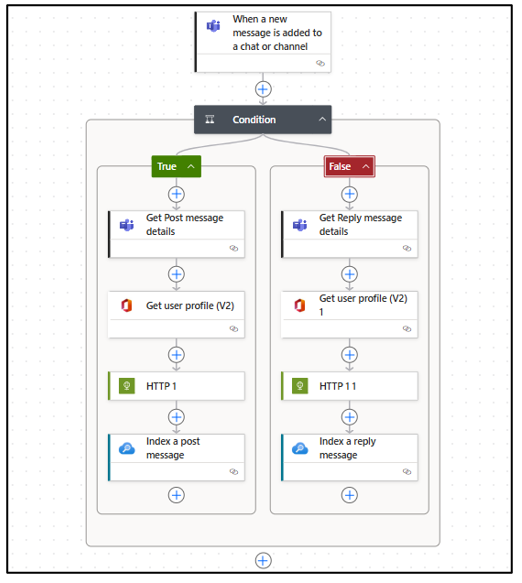

Each new message in Teams triggers a Power Automate flow that:

- Detects whether it’s a new post or a reply

- Retrieves message details and user info

- Calls Azure OpenAI to generate a vector embedding

- Indexes the message and metadata into Azure AI Search

3) Setting up the Trigger

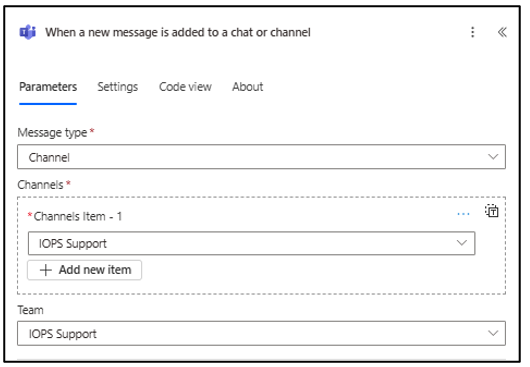

We start the flow with the Microsoft Teams connector trigger:

When a new message is added to a chat or channel

- Message Type: Channel

- Team: Your Teams Name

- Channel: Your Channel Name

This ensures the flow runs whenever a new message or reply is posted. Make sure you are a member of the team; otherwise the team and its channels will not appear as options in the trigger above.

4) Distinguishing Posts from Replies

The next step in the flow is a Condition that checks whether the incoming message is a root post or a reply.

We use this logic:

    equals(
      triggerBody()?['value'][0]['replyToMessageId'],
      triggerBody()?['value'][0]['messageId']
    )

If the replyToMessageId equals the messageId, it’s a new post. Otherwise, it’s a reply.
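To make the branching rule concrete, here is a minimal Python sketch of the same comparison outside Power Automate. The function name `is_root_post` and the sample payloads are hypothetical; only the two field names come from the condition above.

```python
from typing import Any, Mapping


def is_root_post(message: Mapping[str, Any]) -> bool:
    """Return True when the message starts a thread, False when it is a reply."""
    message_id = message.get("messageId")
    # Mirrors the flow's equals(...) check: a root post refers to itself.
    return message_id is not None and message.get("replyToMessageId") == message_id


# Hypothetical trigger payloads, trimmed to the two fields the condition reads.
root_post = {"messageId": "1700000000001", "replyToMessageId": "1700000000001"}
reply = {"messageId": "1700000000002", "replyToMessageId": "1700000000001"}

print(is_root_post(root_post))  # True
print(is_root_post(reply))      # False
```

A message with no `messageId` at all is treated as "not a root post" here, which is a design choice of this sketch rather than something the flow defines.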

5) Handling a New Post

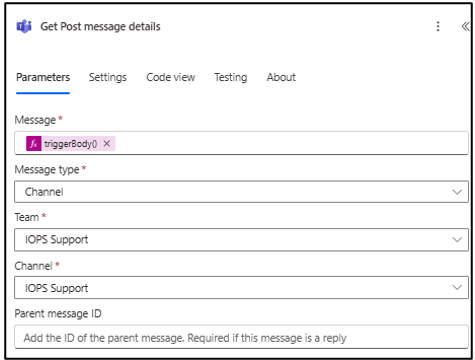

For new posts, the “True” branch of the condition handles the workflow:

- Get Post Message Details – Retrieves the full content of the post, using the Teams action Get message details.

- Get User Profile (V2) – Fetches the author’s details.

- HTTP Request – Sends the message text to Azure OpenAI for embedding.

- Index Message – Uploads the post and its embedding to Azure AI Search, using the action Index a document.

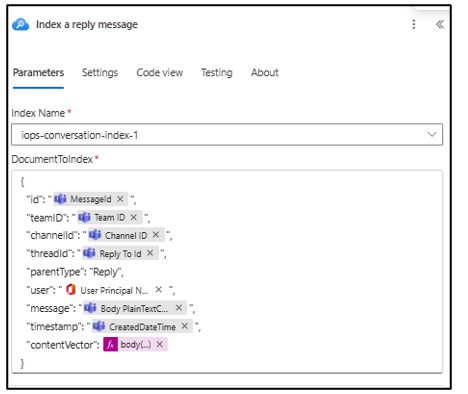

6) Handling a Reply

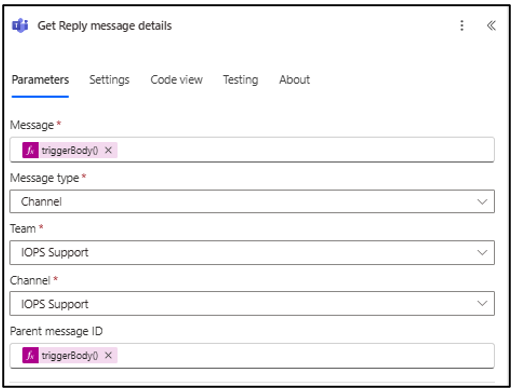

For replies, the “False” branch is nearly identical, except that it uses the parent message ID so the reply stays linked to its thread.

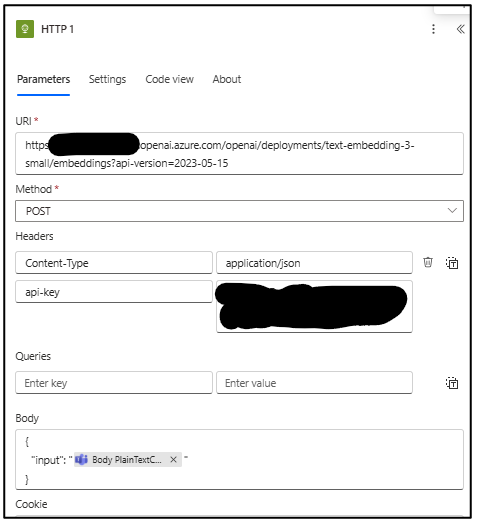

7) Generating Embeddings with Azure OpenAI

In both branches, we call the Azure OpenAI Embeddings API using the HTTP action.

Before generating embeddings, make sure you have deployed an embedding model in your Azure OpenAI resource, for example text-embedding-3-small or text-embedding-3-large. You can do this in Azure AI Foundry (formerly Azure OpenAI Studio) by opening the Deployments section and selecting the embedding model you want. Once it is deployed, you will have a deployment name, an API endpoint, and a key. These are the values you use in Power Automate, or in any other API call, to generate embeddings against your own resource with your own credentials.

Note that the path segment after /deployments/ is the deployment name, not the model name. The URI below assumes the deployment is named text-embedding-3-small; replace it with your own deployment name if it differs.

Configuration:

- Method: POST

- URI: https://<your-resource>.openai.azure.com/openai/deployments/text-embedding-3-small/embeddings?api-version=2023-05-15

- Headers:

  - Content-Type: application/json

  - api-key: <your-key>

- Body:

    {
      "input": "@{body('Get_Post_message_details')?['bodyPlainText']}"
    }

This returns a numeric vector representing the message’s semantic meaning — ideal for similarity-based retrieval later.
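For readers who want to test the endpoint outside the flow, the HTTP action's configuration can be sketched in Python with only the standard library. The helper names `build_embedding_request` and `extract_embedding`, the resource name `my-resource`, and the sample text are assumptions for illustration; the URL pattern, headers, and body follow the configuration above, and the response parsing assumes the usual embeddings response layout with the vector under `data[0].embedding`.

```python
import json
from typing import Any, Dict, List
from urllib import request

API_VERSION = "2023-05-15"  # same api-version as in the URI above


def build_embedding_request(
    resource: str, deployment: str, api_key: str, text: str
) -> request.Request:
    """Build (but do not send) the POST request for the embeddings endpoint."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{deployment}/embeddings?api-version={API_VERSION}"
    )
    # json.dumps escapes quotes and newlines that may appear in chat text.
    body = json.dumps({"input": text}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return request.Request(url, data=body, headers=headers, method="POST")


def extract_embedding(response_body: Dict[str, Any]) -> List[float]:
    """Pull the vector out of a parsed embeddings response."""
    return response_body["data"][0]["embedding"]


# Placeholder values; substitute your own resource, deployment, and key.
req = build_embedding_request(
    "my-resource", "text-embedding-3-small", "<your-key>", 'He said "restart the VPN"'
)
print(req.full_url)
# To actually send it: extract_embedding(json.load(request.urlopen(req)))
```

Building the body with a JSON serializer is worth noting: message text that contains double quotes or line breaks can otherwise produce invalid JSON when it is spliced directly into a string.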

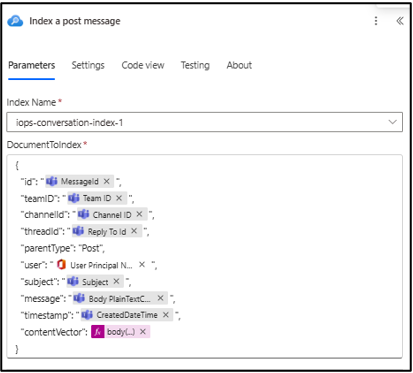

8) Indexing the Data in Azure AI Search

The final step is pushing the enriched message into your search index. We use the Index a document action.

For a post message:

For a reply message, the structure is similar but with "parentType": "Reply".
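As an illustration of what such a document might look like, here is a small Python sketch that assembles one. Only the `parentType` field and its "Reply" value come from the text above; the "Post" value, every other field name (`id`, `threadId`, `content`, `author`, `embedding`), and the helper `build_search_document` are assumptions, so adapt them to your own index schema.

```python
import json
from typing import Any, Dict, List


def build_search_document(
    message_id: str,
    thread_id: str,
    text: str,
    author: str,
    embedding: List[float],
    is_reply: bool,
) -> Dict[str, Any]:
    """Assemble one index document for a post or a reply (hypothetical schema)."""
    return {
        "id": message_id,
        # For a root post the thread ID is the message's own ID;
        # for a reply it is the parent message ID.
        "threadId": thread_id,
        "parentType": "Reply" if is_reply else "Post",
        "content": text,
        "author": author,
        "embedding": embedding,
    }


# Shortened placeholder vector; a real embedding has many more values.
doc = build_search_document(
    message_id="1700000000002",
    thread_id="1700000000001",
    text="Restarting the VPN client fixed it.",
    author="Sample User",
    embedding=[0.01, -0.02, 0.03],
    is_reply=True,
)
print(json.dumps(doc, indent=2))
```

Storing the thread ID on every document keeps replies linked to their root post, so a whole thread can later be fetched with a single filter.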

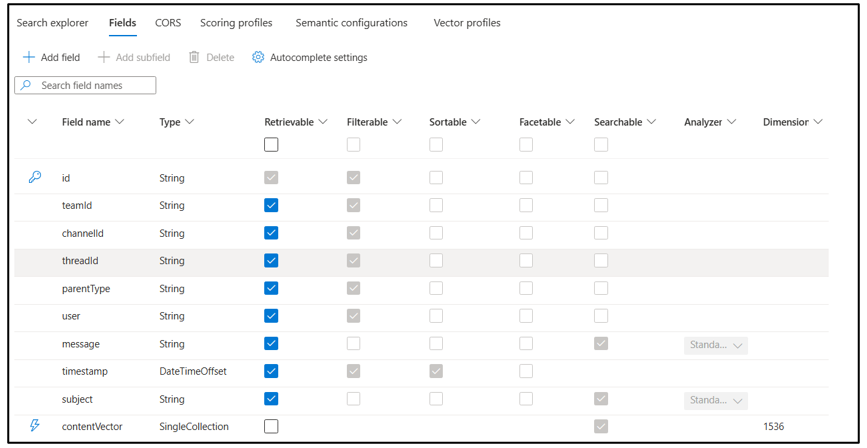

Before running the indexing process, make sure your Azure AI Search index is properly configured.

You must have all required fields already created — including a vector embedding field (for storing embeddings from Azure OpenAI) and other metadata fields such as id, content, title, or category as per your data schema.

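The vector field is essential for vector and similarity queries, so double-check that it has the correct data type (Collection(Edm.Single)), a dimension count that matches your embedding model (1536 by default for text-embedding-3-small, 3072 for text-embedding-3-large), and a vector search configuration in the index definition.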
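The requirements above can be sketched as an index definition, written here as a Python dictionary that serializes to the JSON shape used by the Azure AI Search REST API (api-version 2023-11-01 or later is assumed). The index name, the field names, and the profile and algorithm names are hypothetical; check the property names against the API version you actually target.

```python
import json

EMBEDDING_DIMENSIONS = 1536  # default output size of text-embedding-3-small

index_definition = {
    "name": "teams-messages",  # hypothetical index name
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True, "filterable": True},
        {"name": "threadId", "type": "Edm.String", "filterable": True},
        {"name": "parentType", "type": "Edm.String", "filterable": True},
        {"name": "author", "type": "Edm.String", "filterable": True},
        {"name": "content", "type": "Edm.String", "searchable": True},
        {
            # The vector field: a collection of single-precision floats.
            "name": "embedding",
            "type": "Collection(Edm.Single)",
            "searchable": True,
            "dimensions": EMBEDDING_DIMENSIONS,
            "vectorSearchProfile": "default-vector-profile",
        },
    ],
    "vectorSearch": {
        "algorithms": [{"name": "default-hnsw", "kind": "hnsw"}],
        "profiles": [
            {"name": "default-vector-profile", "algorithm": "default-hnsw"}
        ],
    },
}

print(json.dumps(index_definition, indent=2))
```

If the dimension count in the index does not match the length of the vectors the embedding model returns, indexing requests are rejected, so this is the first setting to verify when uploads fail.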

9) The Result: A Searchable, AI-Ready Conversation Index

Once indexed, all Teams messages and replies are stored in Azure AI Search with vector embeddings.

This allows you to perform semantic search or feed historical context into a copilot or internal knowledge assistant.

You can now:

- Query messages semantically (“Who mentioned network latency last week?”)

- Retrieve contextual thread histories for an agent

- Filter by team, channel, or user metadata
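The query side can be sketched as a request body for the Azure AI Search "search documents" REST operation, again assuming api-version 2023-11-01 or later. The helper `build_vector_query` and the field names `embedding`, `threadId`, `author`, and `content` are hypothetical and must match your own index; the query vector is expected to come from the same embedding deployment that was used at indexing time.

```python
from typing import Any, Dict, List, Optional


def build_vector_query(
    query_vector: List[float], k: int = 5, thread_id: Optional[str] = None
) -> Dict[str, Any]:
    """Build a vector search body, optionally restricted to a single thread."""
    body: Dict[str, Any] = {
        "select": "id,threadId,author,content",
        "vectorQueries": [
            {"kind": "vector", "vector": query_vector, "fields": "embedding", "k": k}
        ],
    }
    if thread_id is not None:
        # OData filter on a metadata field; single quotes in the value are doubled.
        escaped = thread_id.replace("'", "''")
        body["filter"] = f"threadId eq '{escaped}'"
    return body


# Shortened placeholder vector; embed the user's question to get a real one.
query = build_vector_query([0.01, -0.02, 0.03], k=3, thread_id="1700000000001")
print(query["filter"])  # threadId eq '1700000000001'
```

Combining the vector query with a metadata filter is what makes requests such as "similar messages, but only from this thread or this author" possible in one call.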

10) Future Possibilities

With Teams conversations indexed, you can extend this setup to:

- Build an internal chatbot powered by your organization’s conversation data.

- Summarize thread discussions automatically using OpenAI GPT models.

- Discover insights and recurring issues from chat patterns.

- Integrate with Microsoft Copilot or other RAG-based systems.

11) Key Takeaways

- Teams data contains valuable institutional knowledge — capture it before it’s lost.

- Azure OpenAI embeddings plus Azure AI Search turn unstructured chat into structured, discoverable content.

- Power Automate makes this pipeline low-code, repeatable, and enterprise-ready.

By indexing your Teams conversations, you are not just archiving data; you are building a living knowledge base that can power future AI systems.
